NUMA Node Balancing

For those of you running Hyper-V on NUMA (Non-Uniform Memory Access) based platforms (such as HP’s DL785), this post presents a tuning suggestion for placing a virtual machine (VM) on a specific NUMA node.

Every VM in Hyper-V has a default NUMA node preference. Hyper-V uses this preference both when assigning physical memory to the VM and when scheduling the VM’s virtual processors. A VM runs best when its virtual processors and the memory that backs it are on the same NUMA node, because accessing memory on another (“remote”) node is significantly slower than accessing memory on the local node.

By default, the Hypervisor assigns the NUMA node preference each time the VM is started. When choosing the node, it looks for the best fit for the resources the VM needs, giving preference to a NUMA node that can back all of the VM’s memory with locally available node memory.
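The default placement rule described above can be sketched as a small Python model. It is a hypothetical simplification, not Hyper-V’s actual algorithm: `Node` and `choose_preferred_node` are illustrative names, and breaking ties among fitting nodes by most free memory is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical view of one NUMA node: its index and free local memory."""
    index: int
    free_mb: int


def choose_preferred_node(nodes: list[Node], vm_memory_mb: int) -> int:
    """Pick a NUMA node for a VM (illustrative model, not Hyper-V's code)."""
    # Prefer nodes whose free local memory can back the whole VM.
    fits = [n for n in nodes if n.free_mb >= vm_memory_mb]
    if fits:
        # Assumed tie-break: the fitting node with the most free memory.
        return max(fits, key=lambda n: n.free_mb).index
    # No node fits: take the node with the most free memory; the rest of
    # the VM's memory would then have to come from a remote node.
    return max(nodes, key=lambda n: n.free_mb).index


# Node 1 is too small for a 3 GB VM, so node 0 is chosen.
print(choose_preferred_node([Node(0, 8192), Node(1, 2048)], 3072))  # 0
```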

Of course, the Hypervisor can’t predict the memory or CPU needs of VMs that haven’t been created yet. It may already have distributed the existing VMs across the NUMA nodes in a way that fragments the remaining NUMA resources, so that no single node can fully satisfy the next VM to be created. Under these circumstances, you may want to place specific VMs on specific NUMA nodes to avoid the use of remote NUMA memory or to alleviate CPU contention.
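To see why fragmentation matters, the following sketch uses made-up free-memory figures (not measurements from this host): together the nodes have enough free memory for a new 3 GB VM, but neither node can hold it locally, so part of its memory would be remote wherever it lands. `remote_mb_if_placed` is a hypothetical helper introduced for illustration.

```python
# Illustrative free memory (MB) per NUMA node after earlier VMs were placed.
free_mb_per_node = {0: 2048, 1: 2048}
new_vm_mb = 3072


def remote_mb_if_placed(free_mb: dict[int, int], node: int, vm_mb: int) -> int:
    """Memory (MB) that would have to come from other nodes."""
    return max(0, vm_mb - free_mb[node])


total_free = sum(free_mb_per_node.values())
fits_locally = [n for n, free in free_mb_per_node.items() if free >= new_vm_mb]

print(total_free)    # 4096: enough memory in total
print(fits_locally)  # []: no single node can hold the VM locally
print(remote_mb_if_placed(free_mb_per_node, 0, new_vm_mb))  # 1024
```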

Consider the situation with the following 4 VMs:

· Test-1, configured with 2 virtual processors and 3 GB of memory

· Test-2, configured with 4 virtual processors and 4 GB of memory

· Test-3, configured with 2 virtual processors and 3 GB of memory

· Test-4, configured with 1 virtual processor and 1 GB of memory
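Adding up the list gives the total demand the Hypervisor has to spread across the two NUMA nodes in this example. A quick tally in Python, restating only the sizes listed above:

```python
# VM sizes from the list above: name -> (virtual processors, memory in GB).
vms = {
    "Test-1": (2, 3),
    "Test-2": (4, 4),
    "Test-3": (2, 3),
    "Test-4": (1, 1),
}

total_vps = sum(vps for vps, _ in vms.values())
total_gb = sum(gb for _, gb in vms.values())
print(total_vps, total_gb)  # 9 11
```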

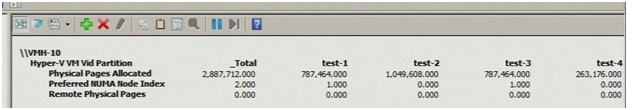

The partial Performance Monitor (PerfMon) screenshot shows how the Hypervisor has nicely balanced the 4 virtual machines across 2 NUMA nodes (see “Preferred NUMA Node Index”).

Hyper-V has placed two VMs on node 0 and two VMs on node 1. In this case, each VM’s memory requirements can be met from local node memory (“Remote Physical Pages” is zero for all the VMs).

Now suppose I want test-2, my largest and most memory- and CPU-hungry VM, to have a dedicated NUMA node of its own. How can this be achieved?

The trick is to set the NUMA node preference for the VM explicitly. Hyper-V will then use that preference whenever the VM is started.

Tony Voellm previously wrote a blog posting about setting and getting the NUMA node preferences for a VM (https://blogs.msdn.com/tvoellm/archive/2008/09/28/Looking-for-that-last-once-of-performance_3F00_-Then-try-affinitizing-your-VM-to-a-NUMA-node-.aspx).

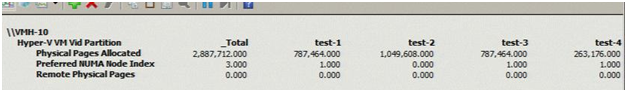

Using Tony’s script, I can set the preferences explicitly so that test-2 always runs on the first NUMA node (node 0) and all the other VMs (test-1, test-3, and test-4) run on the second NUMA node (node 1): “numa.ps1 /set test-2 0”, “numa.ps1 /set test-1 1”, “numa.ps1 /set test-3 1”, and “numa.ps1 /set test-4 1”.
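With those four preferences in place, the per-node demand can be tallied as below. The dictionaries simply restate the VM sizes and the `numa.ps1 /set` arguments from this post; `per_node_demand` is a hypothetical helper, and the result says nothing about whether a node actually has that much capacity.

```python
from collections import defaultdict

# VM sizes from the example: name -> (virtual processors, memory in GB).
vms = {"test-1": (2, 3), "test-2": (4, 4), "test-3": (2, 3), "test-4": (1, 1)}

# Preferences set with the numa.ps1 commands: VM name -> NUMA node.
preferences = {"test-2": 0, "test-1": 1, "test-3": 1, "test-4": 1}


def per_node_demand(vms, preferences):
    """Sum virtual processors and memory (GB) of the VMs preferring each node."""
    demand = defaultdict(lambda: [0, 0])  # node -> [virtual processors, GB]
    for name, node in preferences.items():
        vps, gb = vms[name]
        demand[node][0] += vps
        demand[node][1] += gb
    return {node: tuple(v) for node, v in sorted(demand.items())}


print(per_node_demand(vms, preferences))  # {0: (4, 4), 1: (5, 7)}
```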

A preference takes effect when the VM is started, so if you set it on a VM that is already running, the VM must be restarted before the new preference applies. The preference also moves with the VM: if the VM is moved to another host by Quick Migration or Live Migration, the preference takes effect on the new host.
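The timing rule (the preference is stored with the VM and read only at start) can be illustrated with a toy model. `VirtualMachine`, `set_preference`, and `start` are hypothetical names, not a Hyper-V or WMI API; because the preference is a field of the VM itself, it would travel with the VM in a migration, as described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VirtualMachine:
    """Toy model (hypothetical, not a Hyper-V API) of when a preference applies."""
    name: str
    preferred_node: Optional[int] = None  # stored in the VM's configuration
    running_on: Optional[int] = None      # node in use; None while stopped

    def set_preference(self, node: int) -> None:
        # Only the configuration changes; a running VM stays where it is.
        self.preferred_node = node

    def start(self, default_node: int) -> None:
        # The preference is read at start; otherwise use the default placement.
        if self.preferred_node is not None:
            self.running_on = self.preferred_node
        else:
            self.running_on = default_node

    def stop(self) -> None:
        self.running_on = None


vm = VirtualMachine("test-2")
vm.start(default_node=1)
vm.set_preference(0)
print(vm.running_on)  # 1: still on the node it started on
vm.stop()
vm.start(default_node=1)
print(vm.running_on)  # 0: the preference applies after the restart
```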

After restarting the VMs (so that my preferences took effect), the partial PerfMon screenshot shows that test-2 is alone on node 0 and the other VMs are on node 1.

From now on, whenever test-2 is started, it will be assigned to NUMA node 0, regardless of what is already running on that node.

Finally, a word of caution: be careful when setting the NUMA node preference of a VM. If all the VMs are assigned to the same NUMA node, they may perform poorly because they cannot get enough CPU time; and if there isn’t enough local memory on that node for a newly started VM, the VM won’t start at all.
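Before pinning several VMs to one node, a simple over-commit check like the sketch below can flag both failure modes mentioned above. The per-node capacity (`NODE_CORES`, `NODE_MEMORY_GB`) is a made-up placeholder, not the DL785’s actual configuration, `check_node` is a hypothetical helper, and the check ignores any memory the host reserves for itself.

```python
# Hypothetical per-node capacity; substitute the real figures for your host.
NODE_CORES = 4
NODE_MEMORY_GB = 8


def check_node(pinned_vms: list[tuple[int, int]]) -> list[str]:
    """Warn about VMs pinned to one node; each entry is (virtual processors, GB)."""
    warnings = []
    vps = sum(vp for vp, _ in pinned_vms)
    gb = sum(mem for _, mem in pinned_vms)
    if gb > NODE_MEMORY_GB:
        warnings.append(
            f"{gb} GB requested but only {NODE_MEMORY_GB} GB local: "
            "a VM started last may fail to start")
    if vps > NODE_CORES:
        warnings.append(
            f"{vps} virtual processors on {NODE_CORES} cores: "
            "CPU contention is likely")
    return warnings


# All four example VMs pinned to the same node trips both checks.
for w in check_node([(2, 3), (4, 4), (2, 3), (1, 1)]):
    print(w)
```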

Tim Litton & Tom Basham

Windows Performance Team