Dryad Beta Program Update

We’re happy to announce that, as part of the beta for Microsoft HPC Pack 2008 R2 SP2, we’re shipping a beta of the project code-named “Dryad.” Dryad is Microsoft’s solution for “Big Data.” What’s Big Data? Today’s environment is full of ever-growing mountains of data, from web logs and social networking feeds to fraud detection and recommendation engines, as well as large science and engineering problems like genomic analysis and high-energy physics. Big Data is becoming an increasingly common scenario.

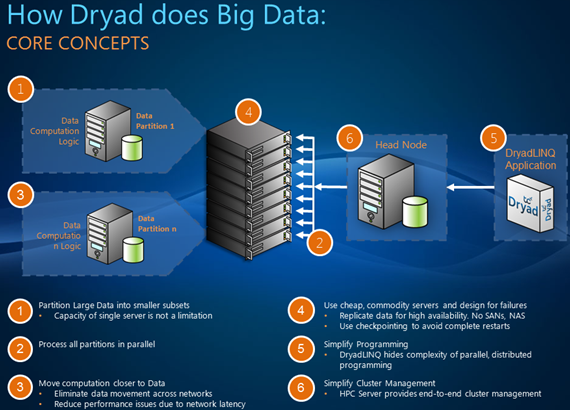

Dryad is designed to help you ask questions of big, unstructured data. It allows you to distribute your data across an HPC cluster built from commodity hardware and execute queries against that data. Dryad is designed to achieve the best possible performance by exploiting data locality: it executes query logic as close as possible to the data, rather than moving the data to the logic.

Dryad’s Distributed Storage Catalog (DSC) maintains a list of where data is distributed across the cluster and works in concert with the HPC Scheduler and Dryad’s Graph Manager to maximize data locality as the query executes.
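To make the idea of data locality concrete, here is a toy sketch of a locality-aware placement decision. It is not Dryad's scheduler or the DSC API. The `LocalityDemo` class, the `PickNode` method, and the dictionary-based catalog are hypothetical names invented purely for illustration, under the assumption that a catalog can tell you which nodes hold a given piece of data.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LocalityDemo
{
    // Hypothetical catalog: partition name -> nodes that store a copy of it.
    // Returns the node a task for that partition should run on, or null
    // when no node is idle.
    public static string PickNode(
        string partition,
        IDictionary<string, string[]> catalog,
        ISet<string> idleNodes)
    {
        // Prefer an idle node that already stores the partition,
        // so the logic moves to the data and nothing is copied.
        if (catalog.TryGetValue(partition, out var holders))
        {
            var local = holders.FirstOrDefault(idleNodes.Contains);
            if (local != null) return local;
        }

        // Otherwise fall back to any idle node; in a real system the
        // data would then have to travel over the network.
        return idleNodes.FirstOrDefault();
    }
}
```

With a catalog that maps `"p0"` to nodes `n1` and `n2`, and idle nodes `n2` and `n3`, the sketch picks `n2` because it already holds the data.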

Let’s take a quick look at the key features of Dryad…

Integration with Windows HPC Server

Dryad is part of HPC Pack 2008 R2 SP2 Enterprise. It installs as part of the HPC Pack on your cluster; once you configure which nodes store Dryad data, you’re done. Dryad jobs are managed through the HPC Cluster Manager, just like MPI and SOA jobs. In addition, there are command-line tools to help administrators move data onto the cluster and manage it.

For example, suppose you had a very large collection of documents and wanted to look for the most common words within those documents. First, you could use Dryad’s built-in data administration tools to load data from your MyBooks folder on a share into Dryad:

```
DSC FILESET ADD \\MyServer\Shared\MyBooks MyWordData /service:MyHeadNode
```

As the data is loaded, DSC distributes it across the cluster. You can use commands like DSC FILESET LIST to examine your data, or view the DSC file set using Windows Explorer.

A Familiar Programming Model with LINQ

Now that the data is loaded, how do you count the words? Dryad uses a LINQ-based programming model to enable developers to express their queries using a familiar syntax. Behind the scenes, Dryad does all the heavy lifting of creating a query plan, deploying your assemblies, and executing the query in a scalable and robust way.

If you’re familiar with LINQ, then writing DryadLINQ queries is very straightforward: create an HpcLinqContext instance and execute a query.

From within a Visual Studio C# project, create an HpcLinqContext that refers to your cluster head node:

```csharp
HpcLinqConfiguration config = new HpcLinqConfiguration("MyHeadNode");
HpcLinqContext context = new HpcLinqContext(config);
```

Next, create a query:

```csharp
IQueryable<Pair> results = context.FromDsc<LineRecord>(inputFileSetName)
    .SelectMany(l => l.Line.Split(new []{ ' ', '\t' },
                                  StringSplitOptions.RemoveEmptyEntries))
    .GroupBy(word => word)
    .Select(word => new Pair(word.Key, word.Count()))
    .OrderByDescending(pair => pair.Count)
    .Take(200);
```

Dryad leverages the LINQ programming model rather than forcing you to rethink your query in terms of a particular pattern like MapReduce.
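If you want to get a feel for this operator chain without a cluster, the same shape of query can be run over an in-memory sequence with ordinary LINQ to Objects. The sketch below is only a local analogue. It does not use HpcLinqContext or DSC, and the `LocalWordCount` class and `TopWords` method are illustrative names, with `KeyValuePair<string, int>` standing in for the sample's `Pair` type.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LocalWordCount
{
    // Same operator chain as the cluster query, but over an in-memory
    // sequence of lines (LINQ to Objects). Nothing here touches Dryad.
    public static List<KeyValuePair<string, int>> TopWords(IEnumerable<string> lines, int n)
    {
        return lines
            // Split each line into words on spaces and tabs.
            .SelectMany(l => l.Split(new[] { ' ', '\t' },
                                     StringSplitOptions.RemoveEmptyEntries))
            // Group identical words and count each group.
            .GroupBy(word => word)
            .Select(g => new KeyValuePair<string, int>(g.Key, g.Count()))
            // Keep the n most frequent words.
            .OrderByDescending(pair => pair.Value)
            .Take(n)
            .ToList();
    }
}
```

Calling `LocalWordCount.TopWords(File.ReadLines(path), 200)` on a local text file would mirror the cluster query on a single machine.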

Finally, print the results. This causes the query to be executed on the cluster.

```csharp
foreach (Pair result in results)
    Console.WriteLine(" {0,-20} : {1}", result.Word, result.Count);
```
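The fact that printing the results is what triggers execution resembles the deferred execution behavior of standard LINQ, where defining a query does no work until it is enumerated. The sketch below demonstrates that behavior with plain LINQ to Objects. It is an analogy, not a description of how Dryad schedules work, and `DeferredDemo` is an illustrative name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DeferredDemo
{
    // Returns how many times the selector had run before and after
    // the query was enumerated.
    public static int[] Run()
    {
        int calls = 0;

        // Defining the query does not run the selector.
        IEnumerable<int> query = new[] { 1, 2, 3 }
            .Select(x => { calls++; return x * 2; });

        int before = calls;

        // Enumerating the query is what triggers the work.
        foreach (int value in query)
            Console.WriteLine(value);

        int after = calls;
        return new[] { before, after };
    }
}
```

Here `before` is 0 and `after` is 3: the selector runs once per element, and only once the `foreach` loop starts pulling results.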

As your job runs, you can use the HPC Job Manager to view its progress, just like any other job running in HPC Server.

That’s it! Distributed word count using Dryad in just a few lines of code. The word count example is just one of several samples we’re shipping with the beta to get you started. Others include sort, k-means clustering, table joins, and SQL connectivity, to mention just a few.

Dryad Leverages Existing Proven Microsoft Technologies

While Dryad provides new features to address the challenges of Big Data, it’s important to understand that it was developed by Microsoft Research over several years and has already been used internally, at scale, on several large projects within Microsoft. It’s built on a series of mature technologies like NTFS and SQL Server, integrates with other parts of your enterprise infrastructure like Active Directory, and builds on existing .NET tools like Visual Studio.

Please download and evaluate the HPC Pack 2008 R2 SP2 Beta and the accompanying documentation and samples. We’d be very pleased to hear your feedback on our DevLabs Forum.