Connectivity from the Datacenter to Azure: VPN, ExpressRoute, and Firewalls – Part 1

Is this situation becoming familiar in your organization?

Understanding the Solution Requirements

When it comes to connecting on-premises networking with Azure, first we need to understand what the solution requires, then we can move on to figuring out which concepts and features of Azure Networking to pursue. There are several areas that need to be discovered about the proposed solution as well as the existing environment.

Does the proposed solution require public Internet connectivity or will it be a simple network scheme in that only the on-prem users will be attaching to the service?

Will the solution require access to IaaS, PaaS or SaaS components or a mixture of the three?

Will this solution need to grow significantly in the future?

The initial questions all dealt with the future solution, but what about the networking components that might already exist on-premises?

What type of DHCP and DNS is currently in use on-prem? Is DNS integrated with Active Directory? How is DHCP handled per location and subnet today?

Does the I.T. team already use some type of I.P. Address Management solution? Do they already have a firm grasp of the I.P. schemes in use today?

The scenario at Contoso goes something like this:

Bob asks Fred what I.P. ranges they have available on their current network. If Fred doesn’t know either, then it’s time to document the network better before thinking about adding new networks to the infrastructure with the cloud solution.

Does the customer require strong Intrusion Detection or Prevention?

What type of firewall technology is the current standard, and how will it interface with the new solution?

Does the internal team handle network provisioning, or is this handled by a vendor? Whoever is responsible needs to be present during the design and implementation for connecting to Azure networks.

Azure Virtual Networking and Cloud Services Overview

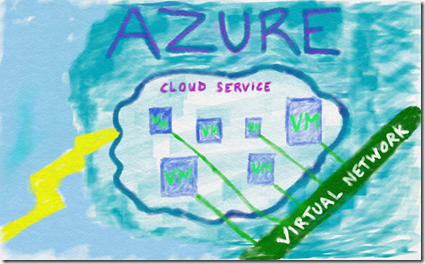

Each of the answers to the above questions holds a key to unraveling the mystery of the proposed solution. Assuming that the project will require virtual machines (VMs) in the cloud, we need to understand the structure of virtual machine networking in Azure. A virtual machine or multiple virtual machines in Azure will always reside in at least one cloud service. Think of the cloud service as an envelope that contains the VMs and extends connectivity to these VMs through a single public-facing I.P. address. The cloud service will perform load balancing across the VMs if so desired. The virtual machines also have access to virtual networks, which are not public facing.

Azure Virtual Networks (VNETs) are created by the administrator of the Azure subscription based on the requirements of the project. Each VNET can be connected to up to 2000 VMs. VNETs can also be connected to other VNETs. A VNET can use public or private I.P. ranges, but use caution with public I.P. ranges: inside a VNET they are not routable from the public Internet, since the routing table entries for these ranges would be held at the customer’s on-premises routers and not in Azure’s tables. Each VNET can have multiple subnets. For more detailed information check out this great Azure Networking Fundamentals course on Microsoft Virtual Academy: https://www.microsoftvirtualacademy.com/training-courses/azure-networking-fundamentals-for-it-pros
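As a quick way to spot which candidate ranges fall into the "public" category that calls for caution, here is a minimal sketch using Python's standard `ipaddress` module. The function name and the sample ranges are hypothetical, and note that `is_private` covers the RFC 1918 blocks plus a few other reserved ranges, so treat the result as a first-pass check only.

```python
import ipaddress

def classify_range(cidr: str) -> str:
    """Label a CIDR 'private' if Python's ipaddress module treats it as
    private (RFC 1918 plus some other reserved blocks), else 'public'."""
    network = ipaddress.ip_network(cidr)
    return "private" if network.is_private else "public"

# Hypothetical candidate ranges for a new VNET.
candidate_ranges = ["10.1.0.0/16", "172.16.8.0/22", "192.168.50.0/24", "8.8.8.0/24"]

for cidr in candidate_ranges:
    print(f"{cidr}: {classify_range(cidr)}")
```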

Care should be taken when creating subnets, as the number of devices (VMs) that can attach to a subnet is locked in by its size. When a subnet requires changing, all devices have to be removed from it first. This can be a painful action to perform when production is running hot, so plan accordingly up front.
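One way to do that up-front planning is to carve the address space into subnets on paper (or in a script) before anything is created in Azure. The sketch below uses Python's standard `ipaddress` module. The address space, the /24 subnet size, and the tier names are illustrative assumptions, not values taken from any particular design.

```python
import ipaddress
from itertools import islice

def plan_subnets(address_space, new_prefix, names):
    """Assign the first len(names) equally sized subnets of address_space
    to the given names, in order."""
    space = ipaddress.ip_network(address_space)
    blocks = islice(space.subnets(new_prefix=new_prefix), len(names))
    return dict(zip(names, blocks))

# Hypothetical plan: three /24 subnets out of a 10.1.0.0/16 address space.
plan = plan_subnets("10.1.0.0/16", 24, ["frontend", "app", "data"])

for name, subnet in plan.items():
    print(f"{name}: {subnet} ({subnet.num_addresses} addresses)")
```

Writing the plan down this way makes it easier to see how much room each subnet has before any VMs are attached.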

Virtual Networks allow the customer to configure CIDRs per subnet per address space. Multiple I.P. address spaces can be utilized; for instance, 10.1.X.X/16 and 10.5.X.X/22 can be utilized within the same virtual network. A warning in advance: Azure takes 4 I.P. addresses from the CIDR by default, so whatever number of I.P. addresses you need, add 4 to it and then proceed to create the subnet.
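The "add 4, then size the subnet" rule can be sketched as a small calculation. The reserved count below is simply the figure quoted in this article and is kept as a parameter, since the exact number Azure holds back should be verified against current Azure documentation. The function name is hypothetical.

```python
# Figure quoted in this article; an assumption to verify against current Azure docs.
AZURE_RESERVED_PER_SUBNET = 4

def smallest_prefix(vm_count: int, reserved: int = AZURE_RESERVED_PER_SUBNET) -> int:
    """Return the longest IPv4 prefix length (smallest subnet) that still
    holds vm_count addresses plus the reserved ones."""
    needed = vm_count + reserved
    # Number of host bits required so that 2**host_bits >= needed.
    host_bits = (needed - 1).bit_length()
    return 32 - host_bits

for vms in (10, 13, 250):
    prefix = smallest_prefix(vms)
    print(f"{vms} VMs -> /{prefix} ({2 ** (32 - prefix)} addresses)")
```

Sizing to the exact minimum leaves no room for growth, so in practice it may be worth going one prefix size larger than this calculation suggests.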

ExpressRoute technology is the latest addition to the Azure framework in terms of connectivity. With ExpressRoute you will need a CIDR of at minimum a /29, as there are two virtual paths established for redundancy. However, if you plan to implement a VPN first and eventually move toward ExpressRoute, a /29 would run out of I.P. addresses, so it is best to use a /28 from the beginning just to be safe.

As we said before, each cloud service's public-facing I.P. address has a load balancer by default. Private virtual network I.P. addresses can use load balancers as well, for instance if you were to set up a SQL Server with Always On features in the solution. Windows Server network load balancing is not supported at this time, so the Azure load balancers have to come into play when architecting the solution. Multicast and broadcast technologies are not supported within a virtual network. IPv6 is not yet supported.

When architecting a web app you may also need to consider the statefulness of your application. Web applications that maintain customer information across failed browser sessions, for example, will need to be architected so that the caching or login information is held centrally rather than on individual nodes of a multi-server web application.

With all of that being said, we have three options for connecting the virtual networks to the on-premises network. The three options are VPN and two variations of ExpressRoute, so let’s investigate those.

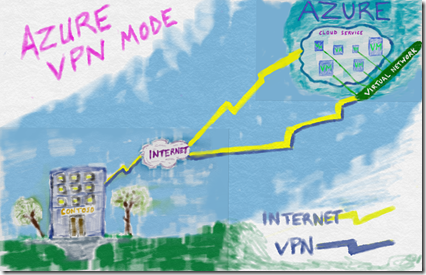

Azure Networking Option 1: VPN Tunneling

The first option for connectivity back to the on-premises datacenter from Azure VNETs is a VPN tunnel. Once a VPN gateway is configured in Azure and the on-premises equipment is properly configured, a traditional VPN tunnel is established between the two. There are two options for VPN throughput, standard and performance. Standard mode VPN tunnels are advertised at 100 Mbps, but with the traditional overhead of a VPN protocol, 70-80 Mbps should realistically be expected. Performance mode VPN tunnels are advertised at 200 Mbps, with VPN protocol overhead likewise reducing the real-world figure somewhat. VPN tunnels from Azure are priced on a per-gateway-hour model. VPN routes are advertised directly over the tunnel to the adjoining router. For a great training video on Azure VPNs check out this virtual academy session:

https://www.microsoftvirtualacademy.com/training-courses/microsoft-azure-site-to-site-vpn
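To get a feel for what the advertised versus realistic throughput figures mean in practice, here is a rough back-of-the-envelope sketch. The 500 GB data set is a made-up example, the function name is hypothetical, and the calculation assumes decimal units and a link that is fully available for the transfer.

```python
def transfer_hours(data_gb: float, throughput_mbps: float) -> float:
    """Hours needed to move data_gb gigabytes at throughput_mbps megabits
    per second, assuming decimal units (1 GB = 8000 megabits)."""
    megabits = data_gb * 1000 * 8
    seconds = megabits / throughput_mbps
    return seconds / 3600

# Hypothetical 500 GB migration over a standard-mode tunnel.
for label, mbps in [("advertised (100 Mbps)", 100), ("realistic (75 Mbps)", 75)]:
    print(f"{label}: {transfer_hours(500, mbps):.1f} hours")
```

Even a modest gap between advertised and realistic throughput adds hours to a large transfer, which is worth factoring into migration windows.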

Firewall Compatibility with Azure Virtual Network VPNs

Speaking of firewalls, let’s now take a look at the supported firewall protocols and vendors for VPN connections. Currently Azure supports a variety of combinations for connectivity via IPsec. IKE v1 and v2 setups are supported; both require pre-shared keys and provide AES-256 encryption. For complete details check out the IPsec section of this page: https://msdn.microsoft.com/en-us/library/azure/jj156075#bkmk_IPsecParameters

Here is a current list of the many vendors that are supported when configuring a site-to-site connection with Azure:

- Allied Telesis

- Barracuda Networks

- Brocade

- Check Point

- Cisco

- Citrix

- Dell SonicWALL

- F5

- Fortinet

- Internet Initiative Japan

- Juniper

- Microsoft

- Palo Alto Networks

- Openswan

- WatchGuard

For a complete list including the model types and firmware versions, plus the proper templates for implementing the connections, check out this link:

https://msdn.microsoft.com/en-us/library/azure/jj156075