SQL Server Database Compression - speed up your applications without extra programming and complex maintenance

The database compression feature was introduced with SQL Server 2008 and is available in SQL Server's Enterprise and Datacenter Editions. Compressing SQL Server backups is available in all editions since SQL Server 2008 R2.

It's the performance gain, not the space reduction

The primary technical effect of running compressed SQL Server databases is the reduction in space consumption. This by itself isn't the important effect for most applications. Even though less disk space means less time that's needed for running backups, which is welcome for large databases.

The important effect of database compression is the performance gain when querying those databases. In an average database only a single digit percentage of all transactions consists of inserts, updates and deletes. Those don't get any faster with compression, but not noticeably slower either. The important effect with compression is, that the vast majority of reads in an average database benefits heavily from the performance improving effect of database compression.

The reason for this is the smaller number of disk pages that have to be read when processing a certain amount of data. A smaller number of pages read from disk means, that a smaller number of pages resides in the data cache for a certain query. This means, that more data can be cached, which again improves performance significantly.

Even CPU time gets reduced

For medium and large databases, even CPU usage goes down: The threshold, where the overhead for compressing and decompressing pages on the fly starts being smaller than the overhead of sorting out which pages to access, is reached very quickly. Maintaining the internal structures that are necessary to access large numbers of disk pages is considerable and drops with the smaller number of used disk pages being managed.

Up to 50% faster

The performance gain when compressing SQL Server databases depends on the type of data that is being compressed. We see an average of 50% time reduction when reading compressed tables and indexes.

This can be measured by design already, and doesn't need real-time monitoring: When reading the results that a query execution displays while SET STATISTICS ON has been activated, the number of logical reads drops drastically for a given query against compressed tables and indexes. Whether those pages are read from memory or disk is irrelevant, reading a smaller number of pages is always faster than reading a bigger number. Most database pages can't reside in cache forever and have to be read from disk at least now an then.

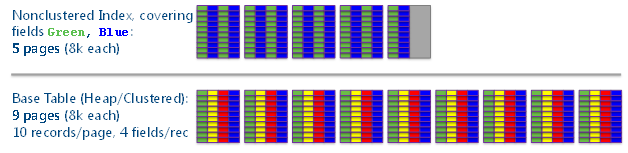

This is illustrated below:

The covering index A for query 'SELECT Green, Blue FROM Table' generates 5 disk page IOs without compression.

Running 'SELECT * FROM table' against the uncompressed base table results in 9 disk page IOs.

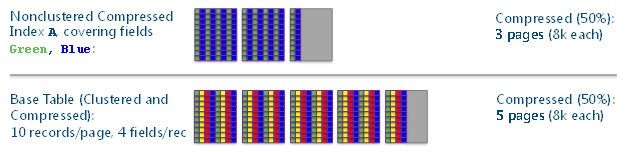

After compressiong the covering index A for query 'SELECT Green, Blue FROM Table', only 3 IOs (60% of the initial value) are generated.

Running 'SELECT * FROM table' against the compressed base table results in 5 IOs, 55% of the initial effort.

Compressing tables and indexes

Database compression is applied by table and/or index. Heaps, clustered and nonclustered indexes can be compressed. Either entirely or partition-wise. It's as simple as setting a flag per object:

ALTER TABLE tbl REBUILD WITH (DATA_COMPRESSION = compressionmode)

ALTER INDEX idx REBUILD WITH (DATA_COMPRESSION = compressionmode)

Where compressionmode is either PAGE or ROW.

PAGE compression first compresses by ROW, then by PAGE. When inserting data in a compressed table or index, it is only ROW compressed until the table or index is rebuilt.

The performance gain with PAGE compression is generally better, but not always, as the compression rate depends on the type of data that is compressed. Testing with actual data helps when deciding which option to use.

Updating compressed tables and indexes

Rebuilding and updating compressed tables and indexes will be slower than with uncompressed data. For the average database application, the overhead here is rather low and negligible.

What's in it for your application development?

Compressing SQL Server tables and indexes is transparent to any application that accesses a database. It also doesn't need extra maintenance, so the DBA's job doesn't get more complex either.

When tuning application or database performance, compression can come in handy: Instead of rewriting critical queries, compressing the tables and indexes that those queries access can already improve performance far enough. If expensive programming hours can be saved with a simple flag that has to be set on some database objects, why not?

Of course, optimal database design and application code is what everyone should aim for. But when a suboptimal situation is given and no time for manual tuning is available, SQL Server's database compression can bridge a gap and save a lot of money.

Detailed whitepaper

The excellent whitepaper at https://msdn.microsoft.com/en-us/library/dd894051(SQL.100).aspx describes database compression, its algorithms, performance implications and best practices in depth.

Down the road: Index compression in SQL Server Denali

For SQL Server's next major release, codenamed Denali, database compression will evolve to even higher compression rates. It's worth to get acquainted with the concept right now in SQL Server 2008 and 2008 R2, so you're ready for the next step once Denali is available.