MOSS 2007 databases corruption

I lately came across a situation where one of my customers suffers from corruption in the production MOSS content databases, most likely due to some SAN related corruption issue.

This corruption was only discovered after several weeks, and unfortunately the earliest usable backup dates from weeks ago. On top of this, the transaction logs that could be used to “replay” all database transactions starting from this last good backup, are also unusable.

The first thing to do would be to checkout the I/O subsystem - as it looks like that is what caused the problem. The damaged pages are contiguous, which usually is a symptom of I/O subsystem failure.

This customer came to me with questions about the way to fix this issue. The answer is pretty obvious: restore the last valid backup. Any operation on the MOSS databases would totally unsupported, which means that DBCC REPAIR_WITH_DATA_LOSS scenario may not be applied here.

I must stress that the only official and supported solution Microsoft is able to provide at this point, is to revert to the last usable database.

Basically, the only thing I have been able to do for this customer is helping them evaluate which kind of content is affected by the corruption.

So, what I have to do is identify which content is excatly affected by the corrption.

When looking at the DBCC CHECK output, I have the following:

DBCC results for 'AllDocStreams'.

Msg 8928, Level 16, State 1, Line 1

Object ID 293576084, index ID 1, partition ID 72057595341373440, alloc unit ID 72057594043957248 (type LOB data): Page (1:1760824) could not be processed. See other errors for details.

Msg 8965, Level 16, State 1, Line 1

Table error: Object ID 293576084, index ID 1, partition ID 72057595341373440, alloc unit ID 72057594043957248 (type LOB data). The off-row data node at page (1:1760824), slot 0, text ID 813336166400 is referenced by page (1:1760788), slot 0, but was not seen in the scan.

Msg 8928, Level 16, State 1, Line 1

Object ID 293576084, index ID 1, partition ID 72057595341373440, alloc unit ID 72057594043957248 (type LOB data): Page (1:1760825) could not be processed. See other errors for details.

Msg 8965, Level 16, State 1, Line 1

Table error: Object ID 293576084, index ID 1, partition ID 72057595341373440, alloc unit ID 72057594043957248 (type LOB data). The off-row data node at page (1:1760825), slot 0, text ID 813336166400 is referenced by page (1:1760788), slot 0, but was not seen in the scan.

Msg 8929, Level 16, State 1, Line 1

Object ID 293576084, index ID 1, partition ID 72057595341373440, alloc unit ID 72057595387314176 (type In-row data): Errors found in off-row data with ID 813336166400 owned by data record identified by RID = (1:1916095:6)

There are 62627 rows in 855 pages for object "AllDocStreams".

CHECKDB found 0 allocation errors and 5 consistency errors in table 'AllDocStreams' (object ID 293576084).

Based on this information, one thing we should do is to have a look at the rows that are damaged using the DBCC PAGE command

dbcc page ( {'dbname' | dbid}, filenum, pagenum [, printopt={0|1|2|3} ])

The filenum and pagenum parameters are taken from the page IDs that come from various system tables and appear in DBCC or other system error messages. A page ID of, say, (1:354) has filenum = 1 and pagenum = 354.

The printopt parameter has the following meanings:

· 0 - print just the page header

· 1 - page header plus per-row hex dumps and a dump of the page slot array (unless its a page that doesn't have one, like allocation bitmaps)

· 2 - page header plus whole page hex dump

· 3 - page header plus detailed per-row interpretation

Knowing this we run the following command for instance:

dbcc traceon (3604)

go

dbcc page (your_content_database_name, 1,1760824,3)

Go

dbcc traceon (3604)

go

dbcc page (your_content_database_name, 1,1760825,3)

go

Running this operation will dump the corrupted pages content. Based on this output we should able to identify which particular piece of content suffers from corruption.

The analysis of the output should provide valuable information in order to have a more precise evaluation of the risks and have a better understanding of the impact on the entire MOSS environment.

Having a look at the content of the SQL pages dumps, we have been to figure out the corrupted items are not always the current ones. It explains why the last published item is still accessible. However, when trying to access the version history of the item through the UI, we get an “unknow error” message due to the corruption of some of the item versions.

In no case it would be feasible to delete the database rows containing the corrupted versions of the item without globally breaking the integrity of the item.

This is actually an easy scenario since the corruption affects data stored in the data rows (IndexID 1 in the DBCC Check output). You might be facing some cases where the corruption affects the page header (IndexID –1 in the DBCC CHeck output) itself as below

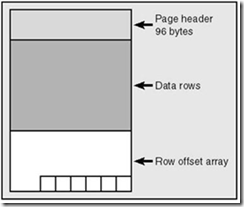

Consider how a SQL page is structured:

The clustered index is how the data is arranged physically; therefore there can be only one clustered index for a given table.

Internally if we need to add data, we do not shift everything on the page in order to insert it in the right place, we just use the slot array. Because there is only one slot array, this is the “physical” order of the data.

The corruption can affect the Page header, the Data rows or the Row offset array. Unfortunately, it looks like the index itself might be corrupted which is a scenario which makes the processing of the SQL page dumps way more difficult and complex: using DBCC IND in order to identify which page belongs to which object and so on…

In the latest example, some corruption occurred in the header and row offset array of the page as well. It means the following command cannot be run with the printopt parameter set to 3, which was the option that made the relevant information about particular SharePoint items available to us in the Horizon scenario:

dbcc page ( {'dbname' | dbid}, filenum, pagenum [, printopt={0|1|2|3} ])

Not being able to use the 3 switch is an additional demonstration of the fact that the data “metadata” is corrupted and the interpretation of the data is subsequently impossible.

Without being able to identify which SharePoint items are affected by the corruption, performing a repair on the database will leave this one in a state where the impact of the operation cannot be determined at all since we cannot match this data to any SharePoint equivalent object.

Conclusion

As a reminder, beyond the concerns expressed in this post, modifying the database schema or database structures is unsupported for additional reasons. Changes that you make to the database contents may be overwritten when you install updates or service packs for Windows SharePoint Services, or when you upgrade an installation to the next product version.

Regarded of the supportability, I would strongly advise to evaluate and implement a strong backup strategy, efficient proactive monitoring and a well-tested Disaster Recovery Planning in order to avoid or mitigate similar situations you might have in the future.