The Home Hyper-V Test Lab – On a Budget (Part 1)

A few people have commented that I should post about how my home Hyper-V lab is set up, so here it is.

In this blog series I'm going to show how I have my lab set up. It was designed to accomplish a few things:

- Give me the maximum performance 'bang for buck'

- Utilise some existing hardware

- Provide an HA (highly available) Hyper-V cluster

- Automate tedious tasks like server provisioning and patching

- Take up as little desk space as possible

- Utilise 'out of box' Microsoft capabilities

DISCLAIMERS

Many things in this blog will NOT be Microsoft recommended practice. It's a home lab, so I can take some shortcuts that you would never take in production. I'll do my best to call out when I've done this.

All equipment listed is an example only. This is how I've done it. I'm not saying it's the only way or the best way to do it, nor am I recommending one product over another.

Any prices quoted are in Australian dollars and are what I paid at the time. They may be higher or lower for you, depending on what you buy, when you buy it and where you are.

As this is a home lab, I care about two things: my money and my time. I don't want to spend more money than I have to. I don't know about you, but I would rather spend my free time playing games than studying and working in my lab. So I don't want to spend hours watching blue (or green) bars go across the screen if I can make that task quick, or automate it.

Part 1 covers the hardware, storage and network setup. Future parts will cover provisioning and automation.

When I set this up, I gave myself a budget of $3000 for compute, storage and network. For the purposes of this blog, I'll assume that, as an IT Pro, you have a TechNet or MSDN subscription, so I won't be including any software pricing.

I also have an existing gaming rig that I wanted to utilise. That machine has to remain a home system that can use HomeGroup and function easily when disconnected from the home network (when I take it to LAN parties). So it needed to stay out of the test lab domain and run a consumer operating system.

One of the great things about Windows 8 Pro is client Hyper-V. This allows me to run some of the VMs outside of the primary cluster (I'll explain why that's handy later). I won't go into much detail on the specs of this box, as it's pre-existing and not part of the $3000 budget. In brief, it's a dual core Intel i7 with 16GB RAM and a reasonable amount of storage, just as you'd expect to find as a gaming rig in any IT Pro's house.

Storage

I didn't want to spend money on any kind of NAS device, and I wanted the flexibility to play around with SMB 3.0 clusters (a design I abandoned; I'll explain why in Part 2). So I built a PC with storage, installed Windows Server 2012, used Storage Spaces to carve up the disks, and presented LUNs using the Windows iSCSI Target software.

Hardware Specs and Cost

| Hyper-V Nodes | Item | Price (AUD) | Notes |
|---|---|---|---|
| Case | 2 x Huntkey Slimline Micro ATX H920 | $109.44 each | Desk space was the main reason. Nice half-height case with PSU included. |
| MainBoard | 2 x ASUS P8H67-M | $104.02 each | Main reason: inexpensive board with 32GB RAM capacity and a 1Gb on-board NIC. |
| CPU | 2 x Intel i7-3770 | $335.89 each | Quad core. No preference between AMD and Intel; the ASUS board pushed me down the Intel path. |
| RAM | 8 x 8GB Corsair 1600MHz DDR3 | $203.70 per 32GB | 32GB per node |
| HDD | 2 x Toshiba 500GB 7200RPM | $83.44 each | Cheap and the smallest I could find. All the VM storage will be on the storage server. |
| NIC | 2 x Intel Pro 1Gb Adapter | $50.93 each | Inexpensive, great performance for a consumer adapter, and a half-height bracket. |

Total for the hosts: $1774.84. Looking good to stay under the $3000 limit!
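As a quick check on the host subtotal, here is a small Python sketch that multiplies each line item's quantity by its unit price from the table. The component labels are just shorthand for the table rows, and `Decimal` is used so the currency amounts don't pick up floating-point rounding.

```python
from decimal import Decimal

# Host line items from the table: (component, quantity, unit price in AUD).
# RAM is priced per 32GB kit, so two kits supply the 8 x 8GB sticks.
host_items = [
    ("Case", 2, Decimal("109.44")),
    ("MainBoard", 2, Decimal("104.02")),
    ("CPU", 2, Decimal("335.89")),
    ("RAM (32GB kit)", 2, Decimal("203.70")),
    ("HDD", 2, Decimal("83.44")),
    ("NIC", 2, Decimal("50.93")),
]

def total_cost(items):
    """Sum quantity x unit price; Decimal keeps currency arithmetic exact."""
    return sum((qty * price for _, qty, price in items), Decimal("0"))

BUDGET = Decimal("3000")
hosts_total = total_cost(host_items)
print(f"Hosts: ${hosts_total}")                       # Hosts: $1774.84
print(f"Left for storage: ${BUDGET - hosts_total}")  # Left for storage: $1225.16
```

That leaves $1225.16 of the budget for the storage server.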

| iSCSI Server | Item | Price (AUD) | Notes |
|---|---|---|---|
| Case | AYWUN A1-BJ | $53.10 | Inexpensive, with a good number of drive bays (had to loosen the desk space requirement on that one). |
| MainBoard | ASUS P8H61-MX | $86.68 | Inexpensive, with an on-board 1Gb connection. |
| CPU | Intel i5-3330 | $219.00 | The storage box does not need a lot of CPU. |
| RAM | 2 x 4GB Corsair 1600MHz DDR3 | $71.51 | |
| HDD1 | 1 x Toshiba 500GB 7200RPM | $83.44 | OS install drive |
| HDD2 | 1 x 1TB Western Digital 64MB cache 7200RPM SATA2 | $159.28 | Second performance tier storage |
| HDD3 | 1 x 256GB Samsung SSD 840 Pro | $283.88 | Primary performance tier storage |
| NIC | 2 x Intel Pro 1Gb Adapter | $50.93 each | Inexpensive, great performance for a consumer adapter. |

Total for the storage server: $1218.03. Total overall was $2991.87, so I was able to keep it under the $3000 limit!

Here are a couple of photos of the Hyper-V host build, which give you an idea of the case size.

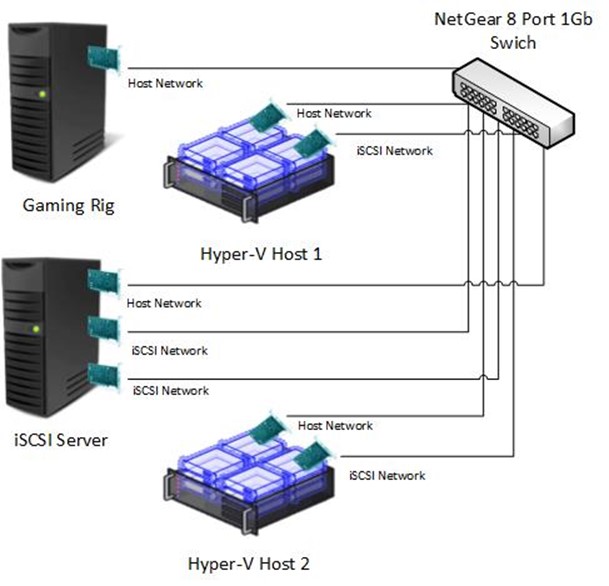

Networking

Each host has two 1Gb NICs: one for VM and host traffic and one for iSCSI. The iSCSI server has three 1Gb NICs: one for host traffic and two for iSCSI. Having two iSCSI NICs on the server made a performance difference, as illustrated in the performance testing section.

PRODUCTION WARNING: Having all networks (Host, Guest, Cluster, CSV and Live Migration) on the same 1Gb network is most certainly not recommended practice. I would hope that when implementing Hyper-V in production, you would be doing so on server equipment with better NICs than two 1Gb adaptors! For recommendations on Hyper-V networking setups, please refer to the following TechNet article: https://technet.microsoft.com/en-us/library/ff428137(v=WS.10).aspx

The following diagram illustrates the physical network setup for the lab. The host network is in the 10.100.0.x range and the iSCSI network is in the 192.168.0.x range. Putting iSCSI traffic in a different range is the easiest way to ensure those adaptors are used exclusively for iSCSI.
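The address plan can be sanity-checked with Python's standard `ipaddress` module. This sketch assumes /24 masks for the two ranges and uses made-up adapter names and addresses purely for illustration. The point is that the ranges don't overlap, so traffic to a 192.168.0.x iSCSI target should only leave through the adapter that sits on that subnet.

```python
import ipaddress

# Assumed /24 masks for the "10.100.0.x" host range and "192.168.0.x" iSCSI range.
HOST_NET = ipaddress.ip_network("10.100.0.0/24")
ISCSI_NET = ipaddress.ip_network("192.168.0.0/24")

# The plan only works if the two ranges are disjoint.
assert not HOST_NET.overlaps(ISCSI_NET)

def classify(address):
    """Return which lab network an adapter address falls in."""
    ip = ipaddress.ip_address(address)
    if ip in HOST_NET:
        return "host"
    if ip in ISCSI_NET:
        return "iscsi"
    return "unknown"

# Hypothetical adapter names and addresses, for illustration only.
adapters = {
    "HV1-host": "10.100.0.11",
    "HV1-iscsi": "192.168.0.11",
    "HV2-host": "10.100.0.12",
    "HV2-iscsi": "192.168.0.12",
    "STOR-host": "10.100.0.20",
    "STOR-iscsi1": "192.168.0.21",
    "STOR-iscsi2": "192.168.0.22",
}

for name, addr in adapters.items():
    print(f"{name:12} {addr:15} {classify(addr)}")
```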

Network and Disk Performance Testing

Is it really worth having two NICs in the iSCSI server? How much difference will an SSD make? I could have put in 3 x 1TB spindle drives for slightly more than the cost of a single SSD plus a single spindle drive. How does the performance compare? Well, I've tried both and tested them. Here are the results.

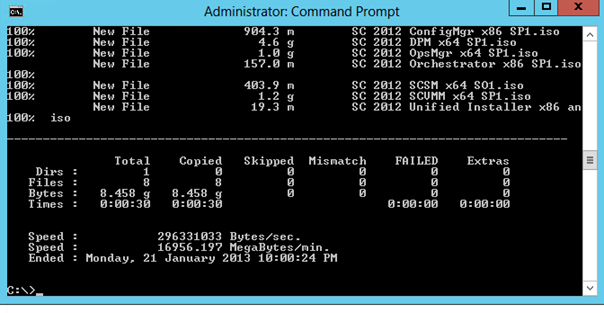

In the tests I really wanted to determine the sweet spot for disk writes. I was not so concerned about reads (I'll explain why in Part 2). Each test involved copying approximately 8.5GB of data (all the ISOs for System Center 2012) to the iSCSI server. This was done from a single Hyper-V host to the iSCSI server to set a benchmark, then from both Hyper-V hosts at the same time.

Test 1

Copy of the test data from a single Hyper-V host to the iSCSI server over a dedicated 1Gb link.

Configuration

| Component | Config |
|---|---|
| Hyper-V Host NICs | 1 x dedicated 1Gb for iSCSI (one per host) |
| iSCSI Server NICs | 1 x dedicated 1Gb for iSCSI |
| iSCSI Server disks | 3 x 1TB 7200 RPM in a storage space (no mirroring and no parity) |

Copy Time

That was not the first copy, so the time of 30 seconds is a little deceptive, as there is some caching going on.

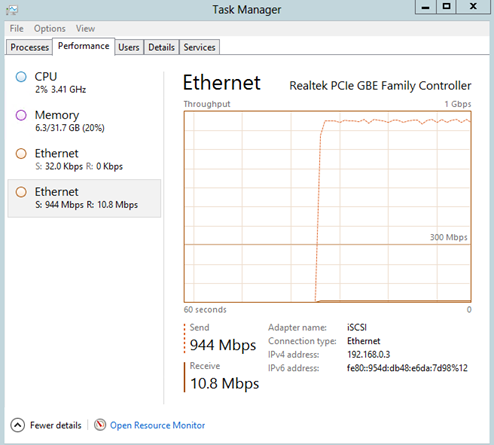
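To see why the 30 seconds must be flattered by caching, it helps to work out the fastest the data could cross the wire. This minimal sketch assumes decimal units (8.5GB as 8.5 x 10^9 bytes, 1Gb/s as 10^9 bits/s), an even split of the link when hosts share it, and no protocol overhead, so it gives a floor rather than a prediction.

```python
def min_copy_seconds(data_gb, link_gbps=1.0, hosts_sharing=1):
    """Lower bound on copy time at raw line rate.

    Assumes decimal units (1 GB = 10**9 bytes, 1 Gb/s = 10**9 bits/s), an even
    split of the link between hosts, and zero TCP/iSCSI overhead, so a real
    copy over the wire can only take longer than this.
    """
    bits = data_gb * 1e9 * 8
    per_host_bits_per_second = link_gbps * 1e9 / hosts_sharing
    return bits / per_host_bits_per_second

print(round(min_copy_seconds(8.5)))                   # 68: one host, one 1Gb link
print(round(min_copy_seconds(8.5, hosts_sharing=2)))  # 136: two hosts, one 1Gb link
```

A 30-second copy is well under the roughly 68 seconds a single 1Gb link needs, and the Test 2 times of 1:16 and 2:00 likewise sit under the 136-second floor for two hosts sharing one link, which fits with the caching noted in both tests.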

Network Util

As you can see, the throughput was excellent, and that level was sustained for the duration of the copy. I thought that was impressive given it's consumer-grade equipment.

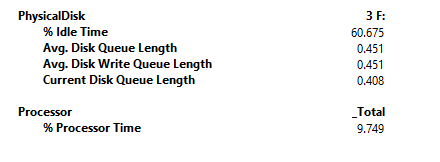

Disk Queue

The numbers to look at are Avg. Disk Queue Length and Avg. Disk Write Queue Length. If either is more than 5 (the number of physical drives plus 2), the copy is disk constrained. At 0.451, we are nowhere near saturating the disks.
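The rule of thumb above is simple enough to express directly. This sketch encodes the "physical drives plus 2" threshold and runs it over the average queue lengths reported in this and the later tests (0.8 for Test 2 and 185.6 for Test 3, as quoted in the Test 3 disk queue section; 46 for Test 4). The rule is really aimed at spinning disks, so applying it to the single SSD in Test 4 is a loose fit, though it agrees with the conclusion drawn there.

```python
def disk_queue_threshold(physical_drives):
    """Rule of thumb from the text: number of physical drives plus 2."""
    return physical_drives + 2

def is_disk_bound(avg_queue_length, physical_drives):
    """True when the average queue length exceeds the rule-of-thumb threshold."""
    return avg_queue_length > disk_queue_threshold(physical_drives)

# (test, reported average disk queue length, physical drives behind the target)
readings = [
    ("Test 1", 0.451, 3),
    ("Test 2", 0.8, 3),
    ("Test 3", 185.6, 3),
    ("Test 4 (SSD)", 46, 1),
]

for test, queue, drives in readings:
    verdict = "disk bound" if is_disk_bound(queue, drives) else "not disk bound"
    print(f"{test}: {queue} vs threshold {disk_queue_threshold(drives)} -> {verdict}")
```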

Test 2

Copy of the test data from both Hyper-V hosts to the iSCSI server over a single dedicated 1Gb link at the same time. The copy was done to separate directories on the iSCSI server.

Configuration

| Component | Config |
|---|---|
| Hyper-V Host NICs | 1 x dedicated 1Gb for iSCSI (one per host) |
| iSCSI Server NICs | 1 x dedicated 1Gb for iSCSI |
| iSCSI Server disks | 3 x 1TB 7200 RPM in a storage space (no mirroring and no parity) |

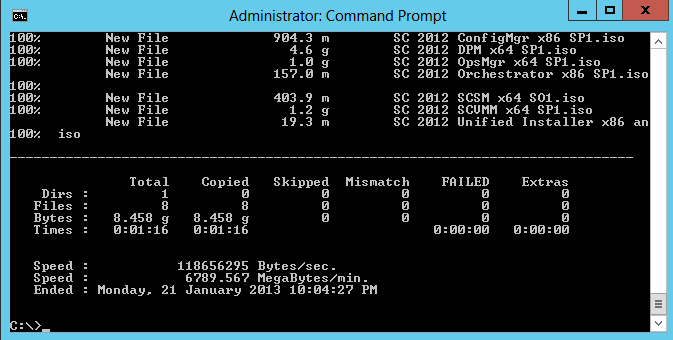

Copy Time

Same deal as the first test: this was not the first copy run, so there is some caching. Even so, the copy times have increased from 30 seconds to 1:16 on one host and 2:00 on the other.

Network Util

The screenshot from the other host is much the same: hard up at half a Gb. With both hosts sharing it, this indicates the single 1Gb iSCSI NIC on the iSCSI server is saturated.

Disk Queue

Roughly double the previous numbers, but still not pushing the disks.

So from here it would seem that I am network bound, and adding a second 1Gb NIC to the iSCSI server, giving each host a dedicated 1Gb path rather than a shared one, should improve performance. Let's see what the testing says.

Test 3

Copy of test data from both Hyper-V hosts to the iSCSI server over two dedicated 1Gb links at the same time. The copy was done to separate directories on the iSCSI Server.

Configuration

| Component | Config |
|---|---|
| Hyper-V Host NICs | 1 x dedicated 1Gb for iSCSI (one per host) |
| iSCSI Server NICs | 2 x dedicated 1Gb for iSCSI |
| iSCSI Server disks | 3 x 1TB 7200 RPM in a storage space (no mirroring and no parity) |

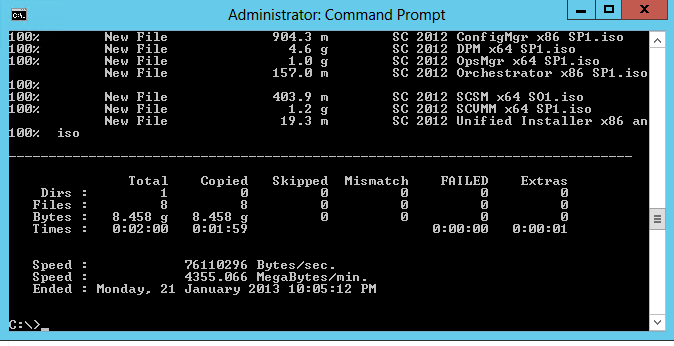

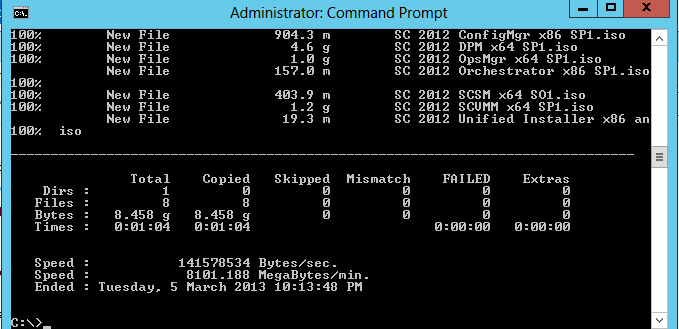

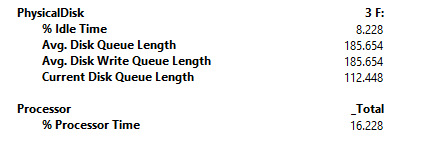

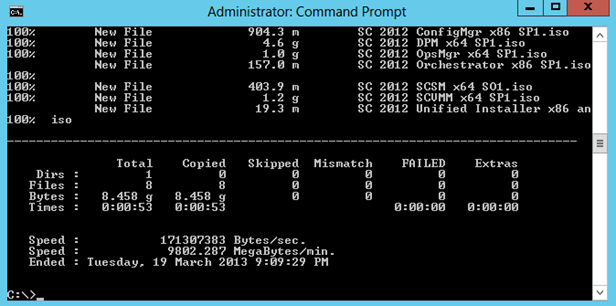

Copy Time

Copy times have gone down, from 1:16 to 1:04 and from 2:00 to 1:20 respectively. So better, but not that much better; I expected more given there is now twice the bandwidth.

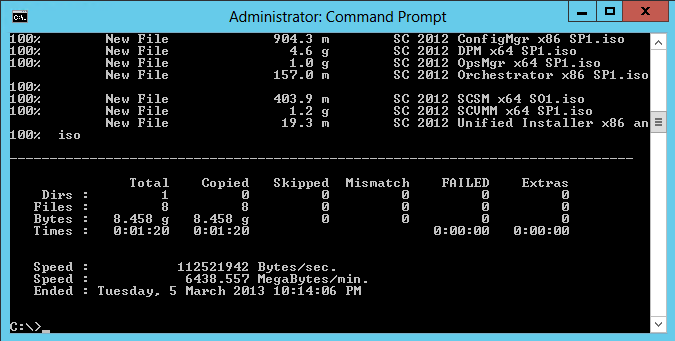

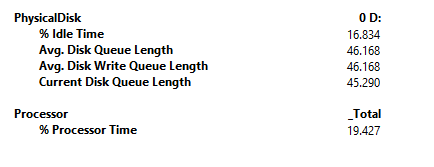
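Putting numbers on "better, but not that much better": if the shared link were the only bottleneck, doubling the bandwidth would at best roughly halve each copy time. This rough sketch converts the m:ss times from Tests 2 and 3 into seconds and compares the observed speedup with that idealised 2x.

```python
def to_seconds(mm_ss):
    """Convert an 'm:ss' copy time to seconds."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)

# Per-host copy times: Test 2 (one shared 1Gb iSCSI link) vs Test 3 (two links).
test2 = ["1:16", "2:00"]
test3 = ["1:04", "1:20"]
IDEAL_SPEEDUP = 2.0  # best case if link bandwidth were the only constraint

for before, after in zip(test2, test3):
    speedup = to_seconds(before) / to_seconds(after)
    print(f"{before} -> {after}: {speedup:.1f}x faster (ideal {IDEAL_SPEEDUP:.1f}x)")
# 1:16 -> 1:04: 1.2x faster (ideal 2.0x)
# 2:00 -> 1:20: 1.5x faster (ideal 2.0x)
```

Both hosts fall well short of 2x, which hints that something other than the network is now holding things back.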

Network Util

So, breaking over the half Gb mark, but not by much. The graph is very choppy and nowhere near saturating the network. The graph from the other host showed the same.

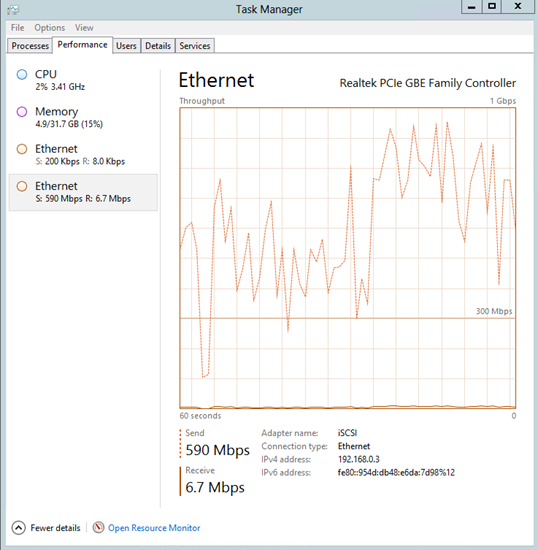

Disk Queue

Woah, where the hell did that number come from! From 0.8 to 185.6. That little bit of extra network has pushed the disk over the edge. Just goes to show, gravity's unforgiving when you drop off the edge of the performance cliff. Time to put in an SSD and run the test again.

Test 4

Copy of test data from both Hyper-V hosts to the iSCSI server over two dedicated 1Gb links at the same time using an SSD drive as the target. The copy was done to separate directories on the iSCSI Server.

Configuration

| Component | Config |
|---|---|
| Hyper-V Host NICs | 1 x dedicated 1Gb for iSCSI (one per host) |
| iSCSI Server NICs | 2 x dedicated 1Gb for iSCSI |
| iSCSI Server disks | 1 x 256GB SSD |

Copy Time

Copy times have gone down again, from 1:04 to 53 seconds and from 1:20 to 51 seconds respectively. That's roughly 57% more throughput on the second node. It's also noteworthy that the copy times are more closely aligned between the two nodes.

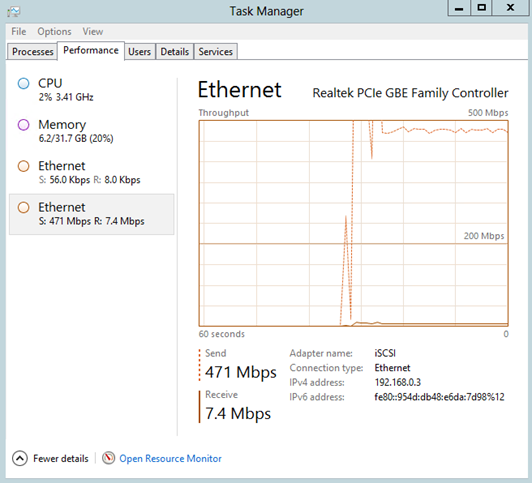

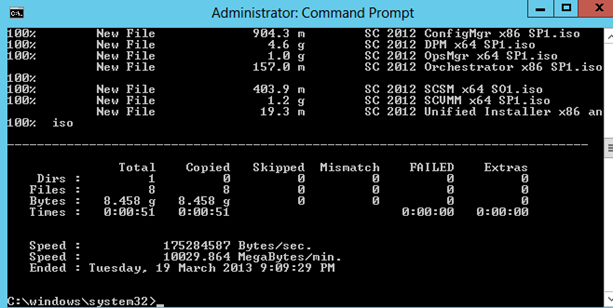
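The 57% figure depends on how "improvement" is measured. This sketch computes both the reduction in copy time and the increase in effective throughput (old time divided by new time, minus one) for each node. The "Node 1/Node 2" labels are just the order in which the times are quoted.

```python
def to_seconds(t):
    """Accept an 'm:ss' time or plain seconds (e.g. '53') and return seconds."""
    if ":" in t:
        minutes, seconds = t.split(":")
        return int(minutes) * 60 + int(seconds)
    return int(t)

def compare(before, after):
    """Return (% reduction in copy time, % increase in effective throughput)."""
    b, a = to_seconds(before), to_seconds(after)
    time_saved = (b - a) / b * 100
    throughput_gain = (b / a - 1) * 100
    return time_saved, throughput_gain

# Test 3 -> Test 4 copy times for each node.
for node, (before, after) in enumerate([("1:04", "53"), ("1:20", "51")], start=1):
    saved, gain = compare(before, after)
    print(f"Node {node}: {saved:.0f}% less time, {gain:.0f}% more throughput")
# Node 1: 17% less time, 21% more throughput
# Node 2: 36% less time, 57% more throughput
```

So the 57% matches the throughput measure; expressed as time saved, the second node's copy got about 36% shorter.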

Network Util

Not reaching full saturation at 1Gb and still a bit choppy, but much better sustained throughput than before. Given the network is not being saturated, there must still be a disk bottleneck.

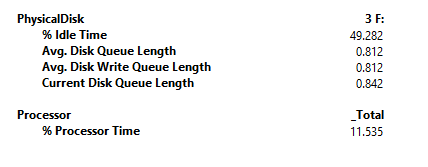

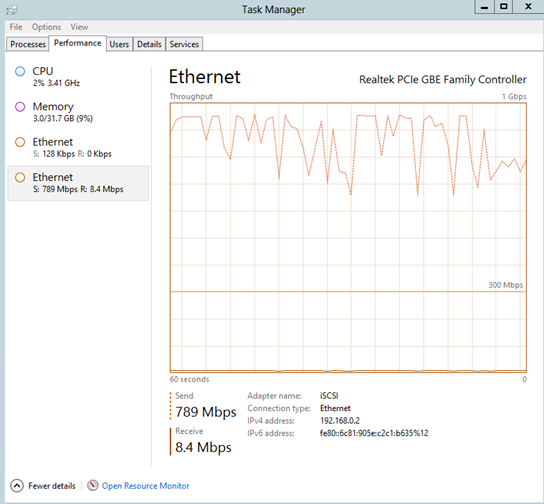

Disk Queue

The disk queue is at 46, so I'm still disk bound (I suspect I might actually be controller bound). Not perfect, but much better than 185!

I think I've found the configuration sweet spot from a performance point of view, while staying within budget. I hope you found it useful.

In the next post, I'll cover how I have configured the virtualisation layer, where VMs are placed, and how to maximise read performance using the CSV block cache.