Practical Architectures Part 2: Scale Out File Servers & Firewalls: How to design your SOFS & Network topology

This one has been a long time coming since my last blog post (almost 10 months, to be precise) </grin>, but that's simply due to the number of field deployments and engagements I have on hand. I'm finally on my (well-deserved) vacation from tomorrow, but I'm going to spam the blog with a few posts before I head out with the family.

My last post dealt with SOFS & Firewalls, and this one follows up with how to design the storage connectivity in a highly secured deployment (for the paranoid). The architecture below represents what you could actually apply in a production scenario (without the need for firewalls :P).

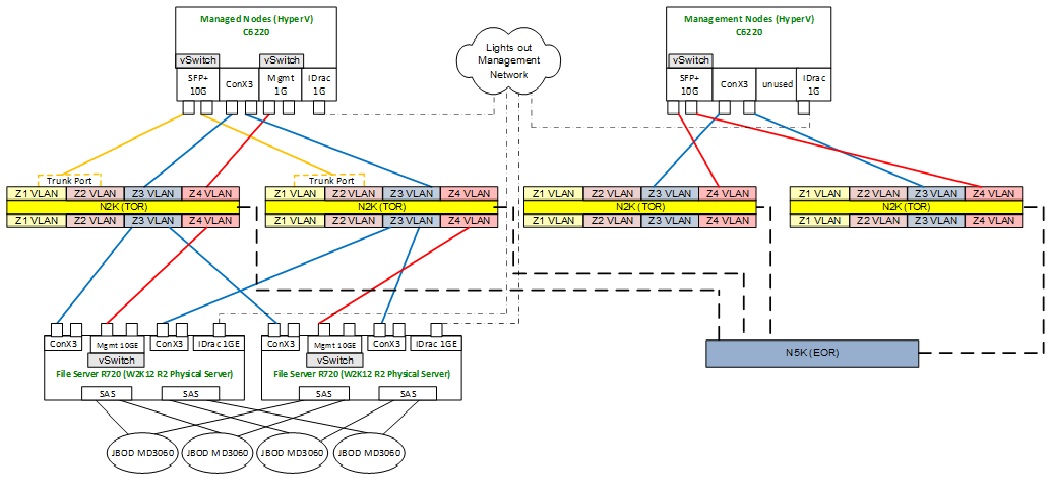

Network Architecture Diagram:

What you see here is a deployment based on Dell hardware, with the C6220 as compute, SFP+ 10G-capable switches, and MD3060E JBODs as storage. Cisco N2K switches serve as top-of-rack (TOR), while the Cisco N5K serves as end-of-row (EOR).

The network flow is structured as follows:

Note: This architecture is derived from the Microsoft Hybrid Cloud Reference Architecture, but adapted specifically for a high-security zone deployment.

1. We have created four VLANs on the N2K TOR switches to map to the managed nodes and the SOFS. We are also trunking the Z1 and Z2 VLANs, with multiple redundant links from the SOFS + JBODs to the managed Hyper-V nodes' VLANs.

2. The SOFS authenticates with AD in the Z3 VLAN through the RDMA link.

3. The SOFS RDMA link could stay in the Z3 VLAN that we created on the switch, but given the nature of the deployment, we recommend a separate management link to the Z4 VLAN, where the master AD resides.

4. The Hyper-V management fabric traffic flows through the same SFP+ 10G links, connecting to the Z4 VLAN. There is no need for separate 1GbE ports.
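To make the zone layout in steps 1 to 4 easier to reason about, here is a minimal Python sketch that models each link as the set of VLANs it carries, then checks that every described flow lands on a link carrying its VLAN and that the storage VLANs have redundant SOFS links. The link names, and the assumption that the Hyper-V SFP+ links carry Z1, Z2 and Z4, are hypothetical and only illustrate the idea; in a real deployment the switch and host configuration remain the source of truth.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    name: str
    vlans: frozenset[str]  # VLANs tagged/trunked on this physical link


@dataclass(frozen=True)
class Flow:
    description: str
    link: str  # name of the link the flow is expected to use
    vlan: str  # VLAN the flow must be carried in


# Hypothetical link inventory loosely following steps 1-4.
# Link names and VLAN membership are illustrative assumptions.
LINKS = {
    link.name: link
    for link in (
        Link("sofs-storage-a", frozenset({"Z1", "Z2"})),  # redundant trunk 1
        Link("sofs-storage-b", frozenset({"Z1", "Z2"})),  # redundant trunk 2
        Link("sofs-rdma", frozenset({"Z3"})),
        Link("sofs-mgmt", frozenset({"Z4"})),
        Link("hyperv-sfp10g", frozenset({"Z1", "Z2", "Z4"})),  # storage + management
    )
}

FLOWS = [
    Flow("SOFS to Hyper-V storage (Z1)", "sofs-storage-a", "Z1"),
    Flow("SOFS to Hyper-V storage (Z2)", "sofs-storage-b", "Z2"),
    Flow("SOFS authenticates with AD", "sofs-rdma", "Z3"),
    Flow("SOFS management to master AD", "sofs-mgmt", "Z4"),
    Flow("Hyper-V management fabric", "hyperv-sfp10g", "Z4"),
]


def check_flows(links: dict[str, Link], flows: list[Flow]) -> list[str]:
    """Return descriptions of flows whose link is missing or does not carry the VLAN."""
    problems = []
    for flow in flows:
        link = links.get(flow.link)
        if link is None or flow.vlan not in link.vlans:
            problems.append(flow.description)
    return problems


def is_redundant(links: dict[str, Link], vlan: str, prefix: str) -> bool:
    """True if at least two links whose name starts with `prefix` carry `vlan`."""
    carriers = [lk for lk in links.values() if lk.name.startswith(prefix) and vlan in lk.vlans]
    return len(carriers) >= 2


if __name__ == "__main__":
    print(check_flows(LINKS, FLOWS))  # []
    print(all(is_redundant(LINKS, v, "sofs-storage") for v in ("Z1", "Z2")))  # True
```

Keeping the model as plain VLAN sets per link makes it easy to spot a flow that was planned for a VLAN its link does not actually carry before anything is cabled.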

So what does this achieve? Quite a bit, actually. You decouple storage from compute and avoid the complexity of iSCSI or Fibre Channel (FC). At a high level, using commodity storage such as JBODs is what hyper-scale cloud service providers like Microsoft do.

What other benefits do you get?

Let Windows do the work that was once the fiefdom of expensive SAN technologies: you end up saving a TON of money, with more control.

Data delivery via standard protocol:

- SMB 3.0

Load-balancing and failover:

- NIC Teaming (switch-agnostic)

Load aggregation and balancing:

- SMB Multichannel

Commodity L2 switching:

- Cost-effective networking (Ethernet)

- RJ45

- QSFP

Quality of Service:

- Multiple levels

Host workload overhead reduction:

- RDMA
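The Quality of Service and SMB Multichannel points come down to simple bandwidth arithmetic. The following minimal Python sketch assumes ETS-style minimum-bandwidth percentages (the model Data Center Bridging uses, configured on Windows Server through cmdlets such as `New-NetQosTrafficClass`) and treats multichannel throughput as the sum of NIC speeds. The traffic class names and percentages are hypothetical, and the multichannel figure is a theoretical ceiling that ignores protocol overhead.

```python
from __future__ import annotations


def min_bandwidth_gbps(link_gbps: float, weights: dict[str, int]) -> dict[str, float]:
    """Turn percentage reservations into guaranteed minimums on one link.

    ETS-style reservations are minimums under congestion, not hard caps;
    whatever is not reserved is reported as "unreserved".
    """
    total = sum(weights.values())
    if total > 100:
        raise ValueError(f"reservations add up to {total}%, more than 100%")
    shares = {name: link_gbps * pct / 100 for name, pct in weights.items()}
    shares["unreserved"] = link_gbps * (100 - total) / 100
    return shares


def multichannel_ceiling_gbps(nic_speeds_gbps: list[float]) -> float:
    """Theoretical upper bound when SMB Multichannel spreads traffic over several NICs."""
    return sum(nic_speeds_gbps)


if __name__ == "__main__":
    # Hypothetical traffic classes and percentages on one 10G SFP+ port.
    print(min_bandwidth_gbps(10, {"storage": 50, "live_migration": 20, "management": 10}))
    # {'storage': 5.0, 'live_migration': 2.0, 'management': 1.0, 'unreserved': 2.0}
    print(multichannel_ceiling_gbps([10, 10]))  # 20
```

Because the reservations are minimums rather than caps, storage traffic can still burst above its 5 Gbps share whenever the other classes are idle.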

Scale easily thanks to software-defined networking, while gaining more granular control.

Hope this helps you design, or even replicate (if you're feeling lazy :) ), some of the network topology above in your own environments.

Cheers!